Santa Clara-based Nvidia has been flying high among investors. Last week, it became one of the most valuable companies in the world, at almost three trillion dollars. That's a three with twelve zeroes.

The excitement is driven by one of its products, its line of Graphics Processing Unit (GPU) chips. These very expensive components are used to train the latest generative AI and machine learning models. In today's AI gold rush, Nvidia is selling shovels.

What are these GPUs, and what makes Nvidia's product so valuable?

The computer revolution rides upon the back of the microprocessor, a circuit designed to act as the Central Processing Unit (CPU) of a computer. A CPU reads a stream of instructions, a program, and executes them one by one. A typical instruction asks the CPU to read a couple of numbers from memory, perform an addition or multiplication on them, and write the result back to memory.
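To make the idea concrete, here is a toy CPU sketched in Python. It is an illustration under simplifying assumptions, not how real hardware works: memory is just a Python list, and the instruction format `(op, src1, src2, dest)` and the function name `run_cpu` are invented for this example.

```python
# A toy "CPU": memory is a list of numbers, and a program is a list of
# instructions executed strictly one after another.
# The instruction format (op, src1, src2, dest) is invented for illustration;
# real instruction sets such as x86 or ARM look quite different.

def run_cpu(program, memory):
    """Execute each instruction in order, updating memory in place."""
    for op, a, b, dest in program:
        x, y = memory[a], memory[b]          # read two numbers from memory
        if op == "add":
            result = x + y
        elif op == "mul":
            result = x * y
        else:
            raise ValueError(f"unknown instruction: {op}")
        memory[dest] = result                # write the result back
    return memory


memory = [3, 4, 10, 0, 0]
program = [
    ("add", 0, 1, 3),   # memory[3] = memory[0] + memory[1]  -> 7
    ("mul", 3, 2, 4),   # memory[4] = memory[3] * memory[2]  -> 70
]
print(run_cpu(program, memory))  # [3, 4, 10, 7, 70]
```

Note that the second instruction cannot start until the first has written its result: the CPU walks through the program one step at a time.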

(I talked about the two most-used kinds of microprocessors back in 2020. See Strong Armed, which introduces the ARM and the x86 architectures.)

A program running on a CPU, such as an application on your mobile phone or on a website, executes many thousands, often millions, of such instructions to produce a useful result in the end. Modern microprocessors are very fast, completing billions of instructions per second.

Like CPUs, GPUs are also circuits designed to execute instructions that manipulate numbers in memory. The key difference is that a GPU instruction can manipulate many numbers at once.

These instructions differ from CPU instructions: instead of adding or multiplying two numbers, they are handed a whole array of numbers on which to perform matrix multiplication or a similar operation, producing a set of results in one go. The GPU loads a small program of such instructions, often called a kernel, and runs it over and over on many numbers.

So, whereas a CPU consumes a stream of instructions, a GPU consumes a stream of data.
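The difference in style can be modeled in a few lines of Python. This is only a sketch: nothing here actually runs on a GPU, and the names `launch` and `scale_and_add` are hypothetical. The small program, commonly called a kernel, computes the classic SAXPY operation a·x + y for a single position `i`, and `launch` stands in for the GPU by applying that kernel to every position of the data.

```python
# A minimal model of the GPU style of computation: one small program (a
# "kernel") is applied independently to every element of the input data.
# Plain Python runs these calls one at a time; a real GPU would run many
# of them simultaneously. The names here are hypothetical, not a real API.

def scale_and_add(i, a, xs, ys):
    """Kernel: compute one output element from the same index of the inputs."""
    return a * xs[i] + ys[i]

def launch(kernel, n, *args):
    """Stand-in for a GPU launch: run the kernel once for each index 0..n-1."""
    return [kernel(i, *args) for i in range(n)]

xs = [1.0, 2.0, 3.0, 4.0]
ys = [10.0, 20.0, 30.0, 40.0]
print(launch(scale_and_add, len(xs), 2.0, xs, ys))  # [12.0, 24.0, 36.0, 48.0]
```

Because each output element depends only on the inputs at its own index, the kernel calls can run in any order or all at once, which is exactly the property a GPU exploits with its thousands of cores.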

GPU instructions are useful for two major kinds of parallel computations.

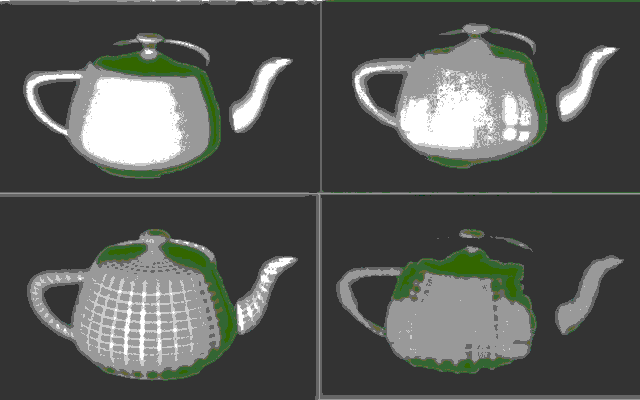

The first kind is simple computations that you need to repeat on many quantities. The original application of this kind was computer graphics, which is why the chips are still called graphics processors.

Movie animations, visual effects, and video games are examples of computer-generated imagery (CGI), which requires computing the colors of many pixels of an image, over and over. As displays have grown over the years, with more pixels and more colors per pixel, the computational demand has grown correspondingly. For video games in particular, it's important to generate successive images, called frames, quickly enough to keep the game responsive, and that takes a great many computations per second. Gamers were the original buyers of expensive GPUs, adding them to their gaming rigs.
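A rough sketch of why graphics suits this model: every pixel's color comes from the same small function, computed independently of the other pixels. The `shade` function below is a made-up gradient, not a real rendering technique, and the 1920×1080 resolution at 60 frames per second is simply an assumed, common gaming target used to show the arithmetic.

```python
# Every pixel's color is computed by the same small function, independently
# of its neighbors, which is what makes graphics a good fit for a GPU.
# The gradient "shader" below is a made-up example, not a real renderer.

WIDTH, HEIGHT = 1920, 1080     # a common "Full HD" display resolution
FRAMES_PER_SECOND = 60         # a typical target frame rate for games

def shade(x, y, frame):
    """Return an (r, g, b) color for one pixel: a gradient that shifts each frame."""
    r = (x + frame) % 256
    g = (y + frame) % 256
    b = (x + y) % 256
    return (r, g, b)

def render_frame(frame, width, height):
    """Compute the color of every pixel in one frame, row by row."""
    return [[shade(x, y, frame) for x in range(width)] for y in range(height)]

# Colors that must be produced each second at this resolution and rate.
pixels_per_second = WIDTH * HEIGHT * FRAMES_PER_SECOND
print(f"{pixels_per_second:,} pixel colors per second")  # 124,416,000 pixel colors per second

# Render a tiny 4x2 frame so the output is small enough to inspect.
print(render_frame(frame=0, width=4, height=2)[1][3])  # (3, 1, 4)
```

Even this modest setting asks for over 124 million pixel colors every second, and in a real game each color may take many operations to compute.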

The other kind of parallel computation appears in certain problems of mathematical physics and engineering, the domain known as high-performance computing (HPC). The typical tasks here are multiplying or inverting matrices and solving large systems of equations numerically.

These operations are routinely used for running large simulations or analyses. If you are producing weather forecasts or simulating the behavior of materials and structures under nonlinear loads, like underwater pipes crushed under hundreds of fathoms of seawater, then you might need these kinds of computations.
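Matrix multiplication shows where the parallelism in such problems comes from. In this plain-Python sketch, each entry of the result is an independent dot product, so in principle all of them could be computed at once. Multiplying two 1000×1000 matrices this way takes 1000 × 1000 × 1000, or a billion, multiply-add steps, which this loop works through one at a time.

```python
# Naive matrix multiplication, a workhorse of HPC computations.
# Each entry C[i][j] is an independent dot product of row i of A with
# column j of B, so all of them could be computed at the same time.
# These loops compute them one after another; a GPU can hand each entry
# (or a block of entries) to a different core.

def matmul(A, B):
    n, m, p = len(A), len(B), len(B[0])
    assert all(len(row) == m for row in A), "inner dimensions must match"
    C = [[0] * p for _ in range(n)]
    for i in range(n):
        for j in range(p):
            # This sum depends only on row i of A and column j of B.
            C[i][j] = sum(A[i][k] * B[k][j] for k in range(m))
    return C

A = [[1, 2],
     [3, 4]]
B = [[5, 6],
     [7, 8]]
print(matmul(A, B))  # [[19, 22], [43, 50]]
```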

A CPU can always perform any task that a GPU can do, given a suitable program. The CPU program will have to read hundreds of memory locations one by one, storing intermediate results in memory. It will take time, but it will eventually produce the same results. What the GPU brings to the table is speed: its specialized instructions can do these kinds of tasks much faster, in favorable cases cutting the time fifty- or sixty-fold.

Let's say you're a programmer writing an application containing some parallel tasks. Then most of your application will run on a CPU, and only a small portion will be suitable for a GPU. If you want to use a GPU, then you will essentially write two separate programs, one for the CPU and a small one for the GPU.

Your CPU program will need to gather the data needed for the parallel portion of the application, give it to the GPU to execute, then take the results it produces and continue with the rest of the program. Hopefully all this trouble and time will be worth the speed-up you get on the GPU portion.
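The round trip described above can be sketched as follows. The "GPU" is simulated in ordinary Python, and `copy_to_device`, `run_kernel`, and `copy_to_host` are hypothetical stand-ins for what a real GPU toolkit provides, not actual library calls.

```python
# A sketch of the CPU/GPU division of labor. The "device" is simulated in
# plain Python; copy_to_device, run_kernel and copy_to_host are hypothetical
# stand-ins for what a real GPU toolkit would provide, not real API calls.

def copy_to_device(data):
    """Hypothetical: ship input data across to the GPU's own memory."""
    return list(data)

def run_kernel(kernel, device_data):
    """Hypothetical: the GPU runs the small program on every element."""
    return [kernel(x) for x in device_data]

def copy_to_host(device_data):
    """Hypothetical: bring the results back into the CPU's memory."""
    return list(device_data)

def square(x):
    return x * x

def application(readings):
    # 1. CPU part: gather and prepare the data for the parallel portion.
    cleaned = [r for r in readings if r is not None]
    # 2. Hand the parallel portion to the "GPU" and collect the results.
    results = copy_to_host(run_kernel(square, copy_to_device(cleaned)))
    # 3. CPU part again: continue with the rest of the program.
    return sum(results)

print(application([1, 2, None, 3]))  # 14
```

The two copies do no useful arithmetic; they are part of the overhead that the speed-up on the parallel portion has to pay for.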

Most real-life applications contain no parallel computations that would benefit from this kind of speed-up. Even when an application does, the GPU program is tucked away in code written by experts. The rest of the application prepares the inputs, sends them to the GPU, and gathers the outputs. Most computer programmers never actually program GPUs at all.

Graphics processors, whose lineage runs back to the CGI of movies like The Abyss (1989) and Jurassic Park (1993), went on to conquer other applications: besides high-performance computing, they flew high on the cryptocurrency fad, where crypto mining demanded GPUs for speed.

Today in 2024, the champion consumers of GPUs are the big machine learning models, which have been all the rage for going on five years now. (I talked about neural networks in 2020; see When AI was old-fashioned.)

Training a deep neural network can be a formidable computational undertaking. It can take weeks to tune millions, or even billions, of parameters, running the network and adjusting the weights over and over. The big companies that have built large datacenters to run these models have been buying GPU chips at a furious pace.
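The "run the network, adjust the weights, repeat" loop can be shown at toy scale with gradient descent, the basic technique behind neural network training. This sketch fits a single weight `w` so that `w * x` matches some targets; the data, step count, and learning rate are arbitrary choices for illustration. A real model runs the same kind of loop over millions or billions of weights, and each step is dominated by matrix arithmetic, which is where GPUs earn their keep.

```python
# A toy version of "run the network, adjust the weights, repeat":
# gradient descent fitting a single weight w so that w * x matches the
# targets. The data, step count and learning rate are arbitrary choices.

def train(xs, ys, steps=200, learning_rate=0.01):
    w = 0.0                                   # start from an arbitrary weight
    n = len(xs)
    for _ in range(steps):
        # Forward pass: run the "network" on every example.
        preds = [w * x for x in xs]
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        # Adjust the weight a little in the direction that reduces the error.
        w -= learning_rate * grad
    return w

xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]   # generated by the rule y = 3x
print(round(train(xs, ys), 3))  # 3.0
```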

While money is no object and time is of the essence, the going is good.