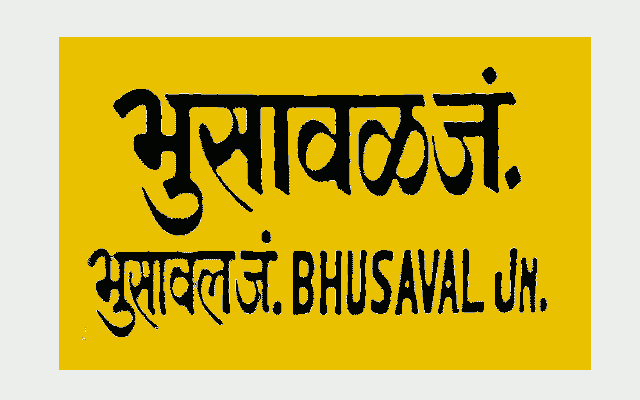

To attend college in India, I had to travel a thousand miles from home, a journey of more than twenty hours by train.

As the train passed through one state after another, the hand-painted signs on railway stations started showing different languages.

This was the last Marathi-language sign I would see as the train carried me north from home.

Painting signs by hand in any language is straightforward. Exchanging multilingual documents between computers takes more work. This is the story of how the world's writing systems were tamed.

A document in a computer is just a list of numbers. At the most basic level, each character in the text is represented by a unique number.
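To make this concrete, here is a minimal Python sketch. Python's built-in `ord` and `chr` functions convert between a character and its number. The sample text "Hi!" is just an illustrative choice.

```python
# Turn a short piece of text into the list of numbers a computer stores.
text = "Hi!"
codes = [ord(ch) for ch in text]   # one number per character
print(codes)                       # [72, 105, 33]

# Going the other way: map each number back to its character.
restored = "".join(chr(n) for n in codes)
print(restored)                    # Hi!
```

Anyone who knows the same number-to-character mapping can reverse the list and get the original text back.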

American computers evolved to represent English-language documents using a standard mapping called the American Standard Code for Information Interchange, or "ASCII." This code assigns the numbers 0 through 127 to the English letters, the digits, punctuation marks, and a handful of special control characters.

Under ASCII, characters are assigned codes as follows.

The digits 0-9 get the codes 48-57.

The uppercase letters A-Z get 65-90.

Lowercase a-z get 97-122.

Punctuation and other special characters get the remaining codes. For example, the dollar sign $ gets the code 36.
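A quick way to check these ranges is a small Python sketch. Encoding a string with Python's built-in "ascii" codec yields a `bytes` object, and `list()` exposes the numeric code of each character. The `string` module supplies the digit and letter sequences.

```python
import string

# Encode each group of characters with ASCII and look at the numbers.
digits = list(string.digits.encode("ascii"))
upper = list(string.ascii_uppercase.encode("ascii"))
lower = list(string.ascii_lowercase.encode("ascii"))

print(digits[0], digits[-1])   # 48 57
print(upper[0], upper[-1])     # 65 90
print(lower[0], lower[-1])     # 97 122
print(ord("$"))                # 36
```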

Anyone you send a document to can read it easily, as long as their computer knows how to map the numbers back to characters. In the olden days, pretty much the only people using computers to communicate were a few American scientists. ASCII was good enough for them.

But even other English-speaking countries couldn't use this code unchanged. (How would a British writer type the pound sign, £?)
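A minimal Python sketch shows the problem. ASCII simply has no number for "£", so encoding fails. For comparison, the sketch uses Latin-1 (ISO 8859-1), one of the later 8-bit codes that extended ASCII and does include the pound sign.

```python
# ASCII has no code for the pound sign, so encoding it raises an error.
try:
    "£5".encode("ascii")
    pound_in_ascii = True
except UnicodeEncodeError as err:
    pound_in_ascii = False
    print("ASCII cannot encode it:", err)

# Latin-1 covers 0-255 and assigns the pound sign the code 163.
pound_in_latin1 = list("£".encode("latin-1"))
print(pound_in_latin1)   # [163]
```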

As computer use spread, other countries started creating their own codes for their own writing systems. This meant that if you wanted to read a document created in some other country, you had to know what code they used so that you could interpret their numbers correctly.
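Here is what happens when the reader guesses the wrong code. This sketch assumes the sender used Windows-1251, a common Cyrillic code page, while the reader's software assumes Latin-1. Both are real codecs in Python's standard library, and the Russian greeting is only an example.

```python
# The sender encodes Russian text with the Cyrillic code page Windows-1251.
message = "Привет"  # "Hello" in Russian
data = message.encode("cp1251")
print(list(data))   # [207, 240, 232, 226, 229, 242]

# A reader who assumes Latin-1 maps the same numbers to different characters.
wrong = data.decode("latin-1")
print(wrong)        # Ïðèâåò

# A reader who knows the right code recovers the original text.
right = data.decode("cp1251")
print(right)        # Привет
```

The numbers never change. Only the table used to interpret them does, and picking the wrong table produces gibberish.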

And how would you mix several languages in one document, the way India's railway station signs do? It was all a big mess.

In 1987, a few good people started a project to create a universal standard for assigning codes to all the writing systems in the world. The Unicode Consortium was incorporated as a nonprofit in 1991.

Representing writing systems with numeric codes is not a simple thing. The problem is not the computers; it's that human languages are not defined precisely enough to make such a system unambiguous.

You need to decide exactly what to call a "character" in each language, and how these characters are allowed to be put together in text.

Here's an example from English: the word "cooperate" can also be spelled "coöperate." (Although nowadays no one except the hoity-toity New Yorker magazine uses the second spelling.)

Notice the two little dots (¨), called a diaeresis. They look like the German umlaut, but in English they signal that the marked vowel is pronounced separately, as in co-operate.

The question is: should the diaeresis be a separate character placed on top of an "o," or should "ö" as a whole count as a single character?
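Unicode's eventual answer was "both." It defines a single precomposed "ö" and also a combining diaeresis that attaches to the letter before it. It then declares the two forms equivalent through normalization. A minimal sketch using Python's standard `unicodedata` module:

```python
import unicodedata

# Option 1: "ö" as one precomposed character (U+00F6).
precomposed = "\u00f6"
# Option 2: a plain "o" followed by a combining diaeresis (U+0308).
combining = "o\u0308"

print(len(precomposed), len(combining))   # 1 2
print(unicodedata.name(precomposed))      # LATIN SMALL LETTER O WITH DIAERESIS
print(unicodedata.name(combining[1]))     # COMBINING DIAERESIS

# The two look identical on screen but compare unequal until normalized.
print(precomposed == combining)                                 # False
print(unicodedata.normalize("NFC", combining) == precomposed)   # True
print(unicodedata.normalize("NFD", precomposed) == combining)   # True
```

NFC composes characters where possible and NFD decomposes them, so software can compare "coöperate" typed either way.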

Thousands of such questions pop up when you're trying to fit a writing system into rules that can be used by computers.

For ASCII, the answer was simple: you cannot represent a diaeresis at all. But the Unicode Consortium wanted to do a better job. They worked with language experts to standardize how each writing system is encoded.

Thanks to their tireless efforts over the years, more than 150 scripts from all over the world are now included in the Unicode table. Unicode version 13.0, released in March 2020, assigns numeric codes to more than 140,000 "characters."
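With a single table covering every script, one string can mix languages freely. The sketch below is hypothetical: the sign text loosely echoes a multilingual railway sign and mixes Marathi (Devanagari script), English, and Chinese. It lists each character's Unicode code point, written in the conventional U+XXXX form, alongside its official name from Python's `unicodedata` module.

```python
import unicodedata

# A hypothetical sign mixing Devanagari, Latin, and Chinese characters.
sign = "मराठी Marathi 中文"

# Every character, whatever its script, has exactly one Unicode code point.
points = [(f"U+{ord(ch):04X}", unicodedata.name(ch)) for ch in sign if ch != " "]

for code, name in points:
    print(code, name)
```

No code-page guessing is needed here, because every script draws its numbers from the same table.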

So now, a Chinese person typing on their phone can exchange messages with anyone in the world.

If you're reading this article from a non-English-speaking country, your browser can display it because it understands Unicode.